There are a few reasons why I stopped writing music reviews.

First, it took up too much time. I was obsessive in my approach, doggedly insisting that listening to an album at least thrice through and researching the artist’s entire back catalog was the bare minimum for giving a score. It was untenable.

Second, I got bored. Maybe that speaks to my limitations as a writer, but there are only so many ways to describe yet another “sophomore effort.” Going rogue on form only added to the load.

Third, I’m sick to death of giving scores.

Who is the score helping? What’s the difference between a 6.9 and a 7.1? Why are we endlessly reducing art to flat decimal places?

This might sound like a distinction without a difference (and an unbelievably pretentious one, at that), but I don’t review music now; I curate it. And when I do, whether in playlists, posts, or best-of lists, I don’t assign scores. I don’t even assign rank; my lists have evolved from numbered chaos to simple alphabetical order.

Still, I can’t pretend that ratings and scores don’t matter.

I can’t make the foundation of music (and film, and book) criticism go away just because I personally dislike it.

But I can definitely point out how silly it is.

Tennis vs. Pitchfork

If you didn’t know about the band Tennis last week, I bet you do now.

Taking potshots at Pitchfork is practically a national pastime at this point, but last weekend, Tennis raised it to a whole new level:

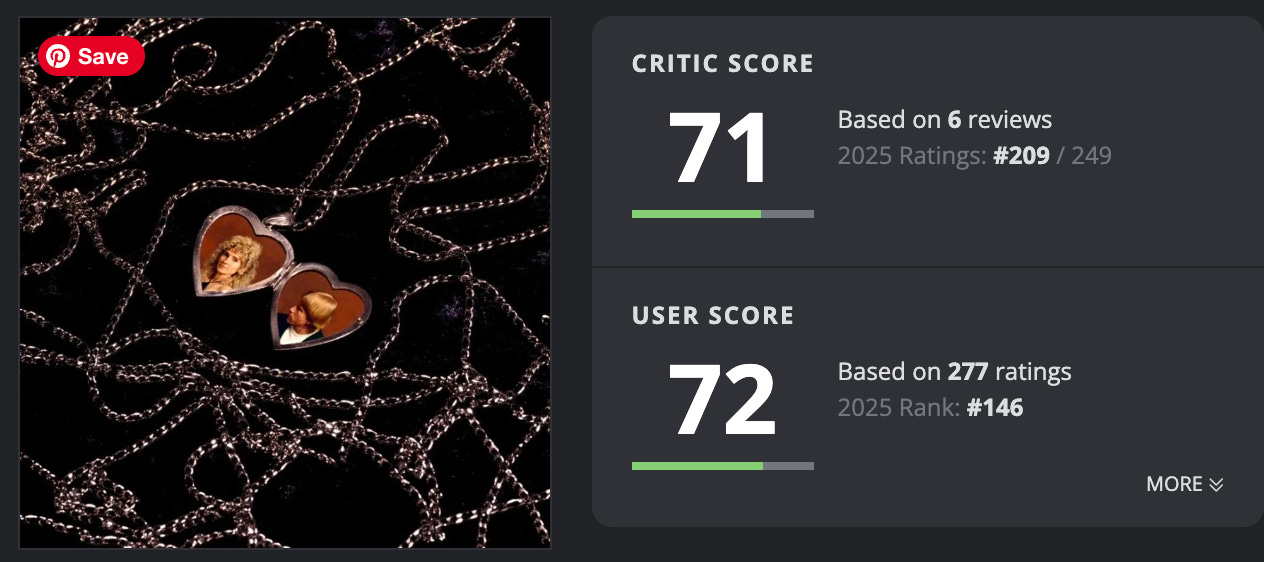

Pitchfork scored Tennis’ latest (and final) album a 6.8. The duo didn’t take it on the chin.

They felt that they deserved better, and that this review (and every review Pitchfork has ever given them) was guilty of a number of perceived sins[1]:

The critic didn’t fully engage with their music in his review…

…which led to frequent interpretive mistakes.

The critic expected too much, so the score was based on unfair standards.

They’ve received essentially the same score for all seven of their albums.

There are “no actionable takeaways” for the band.

There’s a lot to unpack here — too much for one post — but regardless of whether I agree with the bulk of their grievances (or how they made them), I do understand their frustration.

You can decide for yourself if Tennis deserved a better score, or whether clapping back at Pitchfork was warranted, but my questions are a little different:

1. Would Tennis have been upset if they had received an 8.1 (or some other high score), but with the exact same substantive review and all its faults?

2. What does a 6.8 tell you about an album? Does it imply it’s not worth listening to?

Swimming in a Sea of Sevens

I completely agree with Tennis that “[n]ot everyone can or should be a Joni Mitchell or a Leonard Cohen.”

Plenty of albums deserve good ratings even if they aren’t groundbreaking.

The thing is, though… they already get them. In fact, there’s an argument to be made that critics aren’t harsh enough.

Virtually every single record that receives any kind of review at all receives a positive one.

I’ll repeat my exercise from the end of last year: as of this writing, there are 249 albums ranked on albumoftheyear.org. Of those, 216 — nearly 87% — have an average rating of 70/100 or above.

I used to think that the entire music industry had collectively decided to use the American school grading system to score albums, effectively ignoring the bottom half of the scale. I no longer believe that’s true — I just think it’s a self-selection problem. The albums getting those scores are genuinely “pretty good but not stellar,” which is certainly how I’d define a 7/10.

It makes sense that we’re swimming in a sea of sevens. It also stands to reason that a band might take issue with scoring just a hair below that. It’s very difficult to stand out from the pack.

But even if all of these albums legitimately merit the score they’ve received — and that’s a huge if — how are we supposed to swim in this sea?

If almost everything falls between “pretty good” and “also pretty good, actually,” then how does the score function as an evaluative tool?

I can understand why some writers decide to fight the system by boldly discussing more records that they actively dislike (though that’s also a surefire clickbait formula, admittedly).

For my part, I’ve chosen to share only what I do like; you can be the one to assign the score, if you need it.

Need a playlist? Have a playlist.

We’re All Using Different Measuring Systems

Tennis wanted Pitchfork to rate them based on their full body of work, so that readers/listeners would be able to track their progression over the course of their career.

“What are we being rated against if not ourselves?”

I have many alternatives to offer, but I’ll save them for another day. Right now, this is the key:

There is no industry standard for scoring albums.

Not all critics contextualize the albums they review within the rest of a musician’s oeuvre, but plenty do. If two critics taking these two different approaches are reviewing the same album, they might come to two wildly different conclusions.

What’s more, plenty of artists would demand the opposite of what Tennis did.

Imagine you’re in a band that just made a big style change on a new release. Wouldn’t you rather your work be judged fresh in that context?

More to the point, there’s no accounting for taste. One guy’s 9 is another’s 5, and while there’s nothing wrong with that, it turns scoring into a useless shorthand. Even Pitchfork has rewritten history, for crying out loud.

Not Everything Needs a Number

I’m not saying that there’s no place for traditional music criticism anymore. But it’s been a long time since I’ve used reviews for music discovery, and I never pay attention to scores when I make my own recommendations.

If I did, I would miss out on records that have since become favorites.

So, I’m done with album scores. I’m done with spreadsheets. I’m done with obsessively ranking new releases when I can’t even decide on a scoring system that works just for me.

I’m just going to keep sharing whatever it is that I enjoy, using whatever chaotic system is knocking around in my brain, and leave the scoring to everyone else. It’s not like it’s going anywhere anytime soon.

[1] Sins that every music critic is guilty of, as far as I’m concerned. But I’m not trying to put Tennis in the hot seat here, for what it’s worth; I’m just stealing their talking points.

This makes me think two things:

1) Reviews are generally self-selecting (as you’ve pointed out)

2) Most music that gets to be reviewed is “kinda fine” and ranking it 7 seems to be shorthand for “I didn’t love this but I can’t put my finger on why it’s not amazing, so this score seems fine”. There’s also the “wisdom” of crowds. We’re now in a situation where scoring an album 5/10 (which should be the average score) is seen as a huge diss.

I’m interested in why this issue doesn’t seem to apply to film or literature though.

Scores are so arbitrary as to be meaningless. If a score is really high or really low, it’ll pique my curiosity, but that’s about it.

As for reviews, I like doing ‘em, but really it’s more curation than anything; I’m sharing stuff I like with readers I hope’ll like it too. Life’s too short to listen to bad music, and the internet’s already flooded with hate reads anyway…